Day 15: Fitness by Design: How to Shape the Problem to Match Evolution

- Chris Woodruff

- July 28, 2025

- Genetic Algorithms

- .NET, ai, C#, dotnet, genetic algorithms, programming

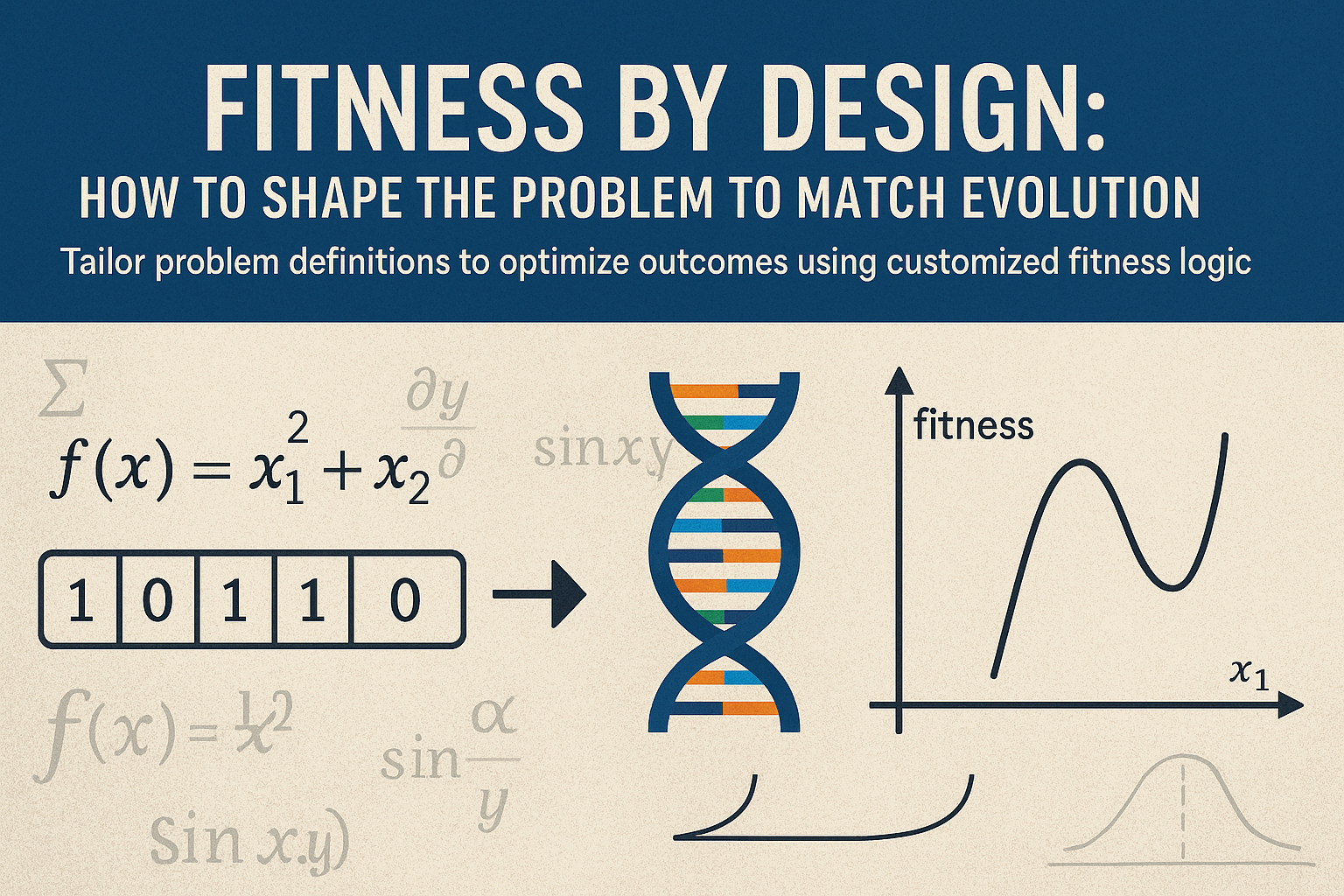

In genetic algorithms, the fitness function is not just a scoring system. It is the definition of success. Your entire evolutionary process hinges on how well the fitness function communicates what “better” means in the context of your problem. If the fitness function rewards the right behaviors, your algorithm will evolve meaningful solutions. If not, you may end up optimizing toward the wrong objective or getting stuck on a plateau of mediocrity.

This post focuses on how to design fitness functions that align with your goals, reflect nuanced problem definitions, and promote useful evolution. Whether you are evolving strings, optimizing numbers, or solving real-world configurations, the fitness function is where the problem and solution space meet.

Let’s revisit a simple example: evolving a string to match “HELLO WORLD”. The most direct fitness function counts the number of characters that match in the correct position.

```csharp
public int GetFitness(string target)
{
    return Genes.Zip(target, (g, t) => g == t ? 1 : 0).Sum();
}
```

While effective, this scoring strategy assumes exact positional matching is the only thing that matters. In more complex problems, this kind of binary scoring creates a flat landscape where many near misses get the same score, offering little direction for improvement.

To improve feedback, consider introducing a weighted scoring model. If early characters are more important, or if the structure of the output matters, you can scale the scoring.

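```csharp
public int GetFitnessWeighted(string target)
{
    int score = 0;

    // Compare only the overlapping positions so a longer candidate cannot index past the target.
    int length = Math.Min(Genes.Length, target.Length);

    for (int i = 0; i < length; i++)
    {
        if (Genes[i] == target[i])
        {
            score += (target.Length - i); // earlier matches are more valuable
        }
    }

    return score;
}
```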
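
To see how position weighting changes the ranking of near misses, here is a minimal standalone sketch. The `WeightedFitness` class and its `MaxScore` helper are hypothetical names introduced for illustration and are not part of the series' code; the scoring rule itself is the same as above.

```csharp
using System;

// Hypothetical helper for illustration; it mirrors the weighted scoring rule above.
public static class WeightedFitness
{
    // Each matching position i contributes (target.Length - i) points.
    public static int Score(string genes, string target)
    {
        int length = Math.Min(genes.Length, target.Length);
        int score = 0;

        for (int i = 0; i < length; i++)
        {
            if (genes[i] == target[i])
            {
                score += target.Length - i;
            }
        }

        return score;
    }

    // Best possible score: n + (n - 1) + ... + 1 = n(n + 1) / 2.
    public static int MaxScore(string target) =>
        target.Length * (target.Length + 1) / 2;
}
```

For the target "HELLO WORLD" the maximum is 66. A candidate that misses only the first character ("XELLO WORLD") scores 55, while one that misses only the last ("HELLO WORLX") scores 65, so the algorithm is pushed to fix early positions first.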

Another technique is to reward proximity rather than exact matches. This softens the fitness landscape and provides gradients that help guide the algorithm toward better solutions.

```csharp
public int GetFitnessSimilarity(string target)
{
    // Subtracting two chars yields an int, so each term and the final sum stay ints.
    return Genes.Zip(target, (g, t) => 255 - Math.Abs(g - t)).Sum();
}
```

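This version scores each position by the distance between character codes. The constant 255 assumes single-byte characters such as ASCII, so every position contributes a non-negative value. Even if a character is incorrect, it can still contribute a partial score. This makes it easier for the algorithm to detect improvement when characters are close to correct.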
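
The difference between exact and proximity scoring is easiest to see side by side. The following is a rough sketch, assuming ASCII input; `FitnessComparison` and its method names are hypothetical and exist only for this comparison.

```csharp
using System;
using System.Linq;

// Hypothetical helper for illustration; not part of the series' code.
public static class FitnessComparison
{
    // Exact positional matching: 1 point per correct character.
    public static int ExactMatch(string genes, string target) =>
        genes.Zip(target, (g, t) => g == t ? 1 : 0).Sum();

    // Proximity scoring: 255 points per position minus the character-code distance.
    // Assumes ASCII-range characters so each term stays non-negative.
    public static int Similarity(string genes, string target) =>
        genes.Zip(target, (g, t) => 255 - Math.Abs(g - t)).Sum();
}
```

Against the target "HELLO WORLD", the candidates "HELLO WORLC" and "HELLO WORLZ" both receive an exact-match score of 10, so selection cannot tell them apart. Under proximity scoring they receive 2804 and 2783 out of a possible 2805, which tells the algorithm that 'C' is closer to 'D' than 'Z' is.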

Sometimes the solution needs to meet multiple objectives. For instance, you may want a solution that is both accurate and economical, measured here by how few distinct characters it uses. This can be achieved by combining multiple factors into a composite score.

```csharp
public double GetCompositeFitness(string target)
{
    int accuracy = GetFitness(target);
    int diversityPenalty = Genes.Distinct().Count(); // lower diversity = better in this context
    return accuracy - 0.1 * diversityPenalty;
}
```

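This composite score favors accuracy while discouraging excessive variation. It is essential to balance the weights carefully so that one metric does not entirely dominate the other. With a weight of 0.1, each additional correct character (+1) still outweighs the penalty for introducing one more distinct character (-0.1).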
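
One way to make the weights easier to reason about is to normalize each component to the range 0 to 1 before combining them. The sketch below is an assumption-laden variant, not the series' implementation: the `CompositeFitness` class, its parameter names, and the default weights are all hypothetical.

```csharp
using System;
using System.Linq;

// Hypothetical variant for illustration; weights and names are assumptions.
public static class CompositeFitness
{
    public static double Score(
        string genes,
        string target,
        double accuracyWeight = 1.0,
        double diversityWeight = 0.1)
    {
        if (target.Length == 0)
        {
            return 0.0;
        }

        // Fraction of target positions matched exactly (0.0 to 1.0).
        double accuracy =
            genes.Zip(target, (g, t) => g == t ? 1 : 0).Sum() / (double)target.Length;

        // Fraction of the candidate made up of distinct characters (0.0 to 1.0).
        double diversity = genes.Length == 0
            ? 0.0
            : genes.Distinct().Count() / (double)genes.Length;

        return accuracyWeight * accuracy - diversityWeight * diversity;
    }
}
```

Because both components share the same scale, the ratio of the two weights directly expresses how much accuracy you are willing to trade for lower diversity.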

For structured outputs, invalid gene sequences may need to be penalized. You can modify the fitness score according to the constraints.

```csharp
public int GetFitnessWithPenalty(string target)
{
    int baseScore = GetFitness(target);
    bool containsInvalid = Genes.Any(c => !target.Contains(c));
    return containsInvalid ? baseScore - 5 : baseScore;
}
```

This approach introduces constraint handling into the fitness logic, steering the population away from infeasible regions of the solution space.
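
A flat penalty treats a candidate with one invalid character the same as a candidate with several. A graded penalty, sketched below, gives the algorithm a gradient out of the infeasible region. This is an alternative to the method above rather than a replacement for it; `ConstraintFitness` and the `penaltyPerViolation` parameter are hypothetical names.

```csharp
using System;
using System.Linq;

// Hypothetical variant for illustration; not part of the series' code.
public static class ConstraintFitness
{
    // Subtracts a penalty for every character that never appears in the target,
    // so removing one violation always improves the score.
    public static int ScoreWithGradedPenalty(
        string genes,
        string target,
        int penaltyPerViolation = 1)
    {
        int baseScore = genes.Zip(target, (g, t) => g == t ? 1 : 0).Sum();
        int violations = genes.Count(c => !target.Contains(c));
        return baseScore - penaltyPerViolation * violations;
    }
}
```

With the default penalty of 1 and the target "HELLO WORLD", "HELLO WORLX" scores 9 (10 matches, 1 violation) and "HELLO WORXZ" scores 7 (9 matches, 2 violations), so each invalid character removed is rewarded separately.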

Effective fitness design is iterative. Monitor the evolution process and watch how solutions change. If evolution is slow or erratic, analyze whether the fitness function effectively distinguishes between better and worse solutions. Small changes in scoring often produce significant improvements in convergence.

Print fitness trends to get insight into algorithm performance.

```csharp
var average = population.Average(c => c.FitnessScore);
var best = population.Max(c => c.FitnessScore);
Console.WriteLine($"Generation {generation} - Avg: {average}, Best: {best}");
```

Seeing the delta between average and best fitness can help identify stagnation or dominance in the population. If average fitness is flat while best fitness improves, elitism may be driving most gains. If both are flat, the fitness function may not provide enough gradient.
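
Reading the log by eye works for short runs. For longer ones, stagnation can be flagged automatically. The following is a minimal sketch under stated assumptions: `StagnationMonitor`, the `patience` threshold, and the `minImprovement` tolerance are hypothetical and would need tuning for a real problem.

```csharp
using System;

// Hypothetical helper for illustration; not part of the series' code.
public sealed class StagnationMonitor
{
    private readonly int _patience;
    private readonly double _minImprovement;
    private double _bestSoFar = double.NegativeInfinity;
    private int _generationsSinceImprovement;

    public StagnationMonitor(int patience = 20, double minImprovement = 1e-9)
    {
        if (patience < 1)
        {
            throw new ArgumentOutOfRangeException(nameof(patience));
        }

        _patience = patience;
        _minImprovement = minImprovement;
    }

    // Call once per generation with that generation's best fitness.
    // Returns true once the best fitness has failed to improve
    // for `patience` consecutive generations.
    public bool Record(double bestFitness)
    {
        if (bestFitness > _bestSoFar + _minImprovement)
        {
            _bestSoFar = bestFitness;
            _generationsSinceImprovement = 0;
        }
        else
        {
            _generationsSinceImprovement++;
        }

        return _generationsSinceImprovement >= _patience;
    }
}
```

Calling `Record(best)` after each generation's log line gives a simple signal for when to revisit the fitness function, raise the mutation rate, or stop the run.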

Remember that genetic algorithms do not understand your intent. They only know how to maximize the function you give them. If you want intelligent evolution, the fitness function must clearly and measurably reward incremental progress.

As you build more advanced applications of GAs, investing time in thoughtful fitness design will yield better solutions, faster convergence, and a more adaptive algorithm. Fitness by design is not optional; it is essential.

You can find the code demos for the GA series at https://github.com/cwoodruff/genetic-algorithms-blog-series