Day 34: Genetic Algorithms vs. Other Optimization Techniques: A Developer’s Perspective

- Chris Woodruff

- September 17, 2025

- Genetic Algorithms

- .NET, ai, C#, dotnet, genetic algorithms, programming

- 0 Comments

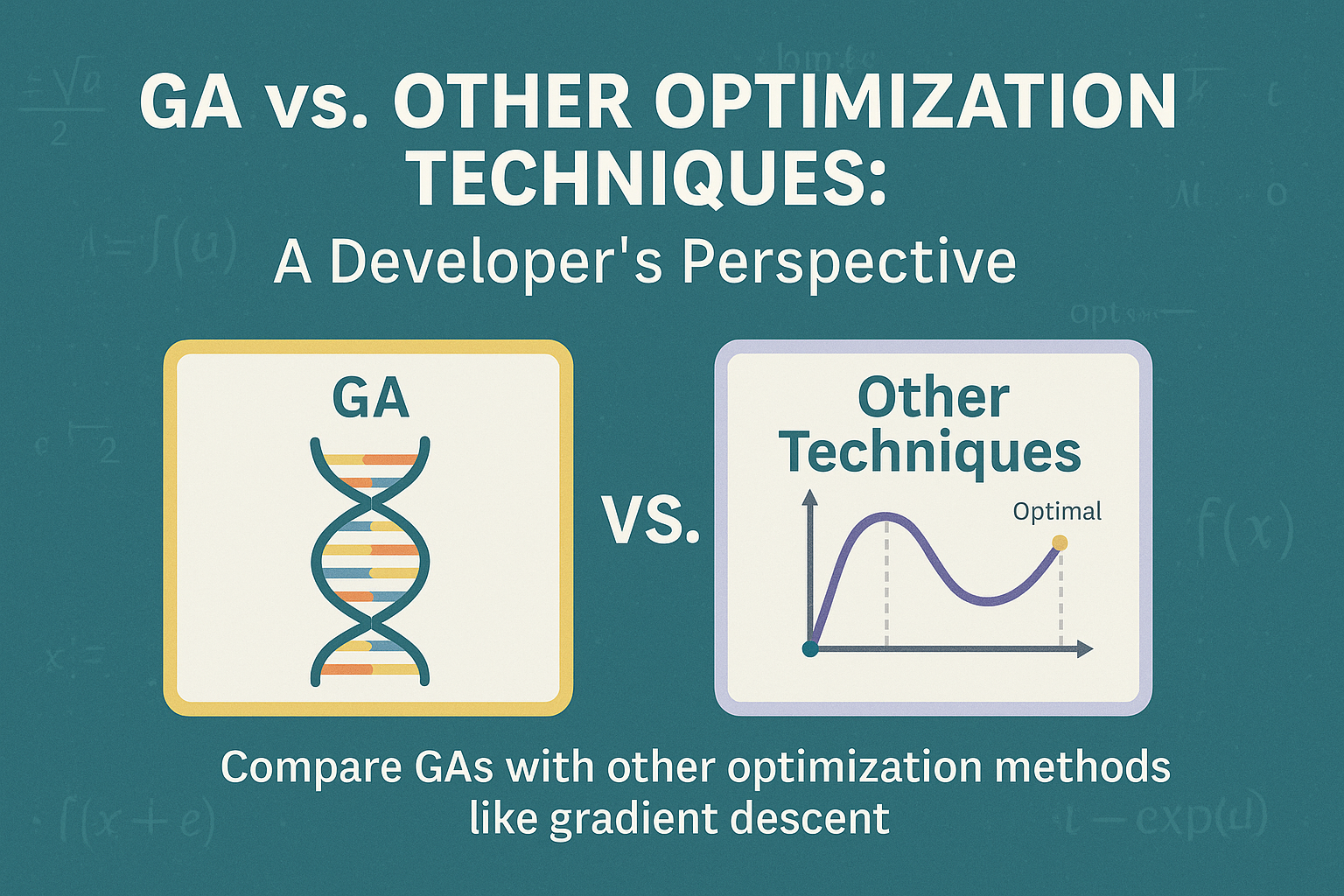

Genetic Algorithms (GAs) are a powerful optimization strategy inspired by the principles of natural evolution. But they are far from the only technique in a developer’s toolbox. In this post, we will compare Genetic Algorithms with other widely-used optimization methods such as Gradient Descent, Simulated Annealing, and Particle Swarm Optimization. The goal is to understand when to use GAs and how they differ in behavior, complexity, and application suitability.

Genetic Algorithms: Population-Based and Stochastic

GAs maintain a population of candidate solutions that evolve over generations. At each iteration, individuals are selected, combined (crossover), and mutated to explore the solution space. GAs are particularly effective in large, complex, and poorly understood spaces, especially where the objective function is discontinuous, non-differentiable, or noisy.

public class Chromosome

{

public double[] Genes { get; set; }

public double Fitness { get; set; }

}

public class GeneticAlgorithm

{

private List<Chromosome> population;

public void Evolve()

{

// Selection, crossover, mutation logic

}

}

Gradient Descent: Deterministic and Derivative-Based

Gradient Descent is a first-order optimization algorithm used primarily in convex optimization and machine learning. It relies on the gradient (partial derivatives) of the objective function to determine the direction of steepest descent.

public static double GradientDescent(Func<double, double> func, Func<double, double> derivative, double initialGuess, double learningRate, int iterations)

{

double x = initialGuess;

for (int i = 0; i < iterations; i++)

{

x -= learningRate * derivative(x);

}

return x;

}

Gradient Descent is efficient and precise for smooth functions, but it struggles with local minima, discontinuities, or non-differentiable problems. In contrast, GAs can handle these with ease but usually require more computation time.

Simulated Annealing: Probabilistic with Decaying Temperature

Simulated Annealing is a single-solution metaheuristic inspired by the annealing process in metallurgy. It explores the solution space by probabilistically accepting worse solutions to escape local optima, gradually reducing the acceptance rate as the temperature cools.

public static double SimulatedAnnealing(Func<double, double> costFunction, double initialSolution, double initialTemp, double coolingRate)

{

double current = initialSolution;

double best = current;

double temp = initialTemp;

Random rand = new Random();

while (temp > 0.1)

{

double next = current + rand.NextDouble() * 2 - 1; // small perturbation

double delta = costFunction(next) - costFunction(current);

if (delta < 0 || Math.Exp(-delta / temp) > rand.NextDouble())

{

current = next;

if (costFunction(current) < costFunction(best))

best = current;

}

temp *= coolingRate;

}

return best;

}

Simulated Annealing and GAs both avoid local minima but differ in structure. GAs use populations and recombination while Simulated Annealing works with a single candidate and a cooling schedule.

Particle Swarm Optimization: Inspired by Flock Behavior

Particle Swarm Optimization (PSO) is inspired by the behavior of birds and fish. It maintains a population (swarm) of particles that update their positions in the search space based on their own experience and that of their neighbors.

public class Particle

{

public double Position { get; set; }

public double Velocity { get; set; }

public double BestPosition { get; set; }

}

PSO and GAs both operate on populations, but PSO emphasizes sharing information among particles, which tends to make convergence faster for many problems. GAs, with their mutation and crossover mechanisms, promote diversity better and are often more robust for deceptive landscapes.

Summary of Differences

| Feature | Genetic Algorithms | Gradient Descent | Simulated Annealing | Particle Swarm Optimization |

|---|---|---|---|---|

| Nature | Population-based | Single-solution | Single-solution | Population-based |

| Uses Derivatives | No | Yes | No | No |

| Handles Local Minima | Yes | No | Yes | Yes |

| Suitable for | Complex, rugged search spaces | Smooth, convex functions | Problems with many local optima | Continuous optimization |

| Stochastic | Yes | No | Yes | Yes |

| Parallelizable | Highly | Somewhat | Somewhat | Highly |

When to Use Genetic Algorithms

Use GAs when:

- The objective function is unknown or difficult to express mathematically

- The solution space is vast and multi-modal

- Other optimization methods fail to escape local optima

- You need to optimize multiple conflicting objectives

- You value diversity and exploration over convergence speed

Final Thoughts

GAs are not a silver bullet, but they shine in domains where structure is poorly understood or traditional methods cannot be applied. As a developer, understanding the trade-offs between different optimization techniques allows you to select the right approach based on the problem characteristics.

In future posts, we will look at building hybrid models that combine the strengths of different optimization techniques including GAs, hill climbing, and machine learning.